In the planning and installation of a networked video system, compression technologies play a critical part in reducing the size of video files, both for transmission over the network and for storage.

The screen of a color television tube is coated with a layer of red, green, and blue phosphor elements. Electron guns, one for each color, shoot electrons at the phosphor elements, causing them to glow briefly. Red, green, and blue are used because mixing them in different proportions reproduces a wide range of colors. Each cluster of one red, one green, and one blue phosphor element is called a picture element, or pel. The intensity of each gun's electron stream determines how brightly its phosphor elements glow.

To present a complete image, the electron guns light up the phosphor elements by “painting” the screen in horizontal lines. Each image is painted in two passes, a technique known as interlacing: the first pass paints every other line, and the second pass fills in the lines in between.
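The two-pass painting described above can be sketched in Python by treating a frame as a list of lines of pel brightness values. This is a simplified model for illustration only; the function names `split_fields` and `weave_fields` are hypothetical and do not come from any standard or library.

```python
from typing import List, Tuple

Frame = List[List[int]]  # a frame as a list of lines, each a list of pel brightness values


def split_fields(frame: Frame) -> Tuple[Frame, Frame]:
    """Split a frame into the two interlaced passes (fields).

    The first pass holds lines 1, 3, 5, ... (list indices 0, 2, 4, ...);
    the second pass holds lines 2, 4, 6, ... (list indices 1, 3, 5, ...).
    """
    return frame[0::2], frame[1::2]


def weave_fields(first: Frame, second: Frame) -> Frame:
    """Interleave the two passes back into one complete frame."""
    frame: Frame = []
    for i in range(max(len(first), len(second))):
        if i < len(first):
            frame.append(first[i])
        if i < len(second):
            frame.append(second[i])
    return frame


if __name__ == "__main__":
    frame = [[line * 10 + pel for pel in range(4)] for line in range(6)]
    first, second = split_fields(frame)
    print(len(first), len(second))  # 3 3
    assert weave_fields(first, second) == frame
```

Splitting with slice steps keeps the sketch short; an odd number of lines simply leaves the first pass one line longer, which `weave_fields` handles.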

Resolution Lines

The number of pels on a television screen is called the resolution; more pels provide a more detailed image. The pels are arranged on the screen in horizontal lines, with a specific number of pels per line.

Although some standard CCTV cameras are called “digital,” the output of the camera is an analog signal, suitable for viewing on standard CCTV monitors. Inside such a camera, the CCD imager converts the light coming through the lens into an electrical signal that is digitized for processing, but this stream of ones and zeros is then converted back into an analog output for standard video use.

To transmit a video stream over a network, the individual images must be converted from their analog state into binary (digital) data. This process is called digitizing. The digitizer samples each pel's signal and converts its brightness and color values into a binary data stream.
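As a rough illustration of digitizing, the sketch below maps an analog brightness level (assumed here to be normalized to the range 0.0 to 1.0) to an 8-bit binary code, then estimates the size of one uncompressed frame. The helper names, the 720 by 480 frame size, the three 8-bit samples per pel, and the 30 frames per second are illustrative assumptions, not values from this article or a specific standard.

```python
def quantize(level: float, bits: int = 8) -> int:
    """Map an analog level in the range 0.0-1.0 to an integer code of `bits` bits."""
    if not 0.0 <= level <= 1.0:
        raise ValueError("level must be between 0.0 and 1.0")
    max_code = (1 << bits) - 1  # 255 for 8 bits
    return round(level * max_code)


def to_binary(code: int, bits: int = 8) -> str:
    """Render a sample code as a fixed-width string of ones and zeros."""
    return format(code, f"0{bits}b")


def raw_frame_bytes(pels_per_line: int, lines: int,
                    samples_per_pel: int = 3, bits: int = 8) -> int:
    """Uncompressed size of one digitized frame, in bytes (assumed sampling)."""
    return pels_per_line * lines * samples_per_pel * bits // 8


if __name__ == "__main__":
    print(to_binary(quantize(0.5)))  # 10000000
    frame = raw_frame_bytes(720, 480)  # illustrative frame size only
    print(frame)                       # 1036800
    print(frame * 30)                  # 31104000 bytes per second at an assumed 30 frames/s
```

Roughly 31 million bytes per second for uncompressed video under these assumptions is why the compression steps that follow matter.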

Lossy & Lossless Compression

After the analog signal has been sampled and converted into binary code, the compression process begins. Video signals can be compressed by a number of methods. Lossy methods reduce file size by permanently discarding some image information, while lossless methods retain all of the original image information. The two are often combined to produce the best possible picture at the smallest file size. Popular compression formats such as MPEG can use both techniques. The technology that performs the compression is called a codec, a general computer term for any technology that compresses and decompresses data, and users can adjust certain parameters within it.
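The difference between lossless and lossy methods can be seen in a few lines of Python. Here the standard-library `zlib` module (DEFLATE) stands in for a generic lossless method, and the hypothetical `lossy_quantize` helper stands in for a lossy step by rounding samples down to a coarser scale; neither is the specific method MPEG uses.

```python
import zlib
from typing import List


def lossless_roundtrip(data: bytes) -> bytes:
    """Compress and decompress with DEFLATE (zlib); the output equals the input."""
    return zlib.decompress(zlib.compress(data))


def lossy_quantize(samples: List[int], step: int) -> List[int]:
    """Round each sample down to a multiple of `step`.

    Differences smaller than `step` are discarded and cannot be recovered.
    """
    return [(s // step) * step for s in samples]


if __name__ == "__main__":
    original = bytes([0, 7, 15, 16, 200] * 100)
    assert lossless_roundtrip(original) == original  # nothing lost
    coarse = lossy_quantize(list(original[:5]), 16)
    print(coarse)                                    # [0, 0, 0, 16, 192]
    print(len(original), len(zlib.compress(original)))  # raw vs. lossless size
```

The lossless round trip returns every byte unchanged, while the quantized samples 7 and 15 both collapse to 0, so the original values can no longer be told apart.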

While security installation companies are generally most concerned with overall picture quality, frame rate, and bandwidth requirements, the choice between lossy and lossless compression also matters. If stored video may be used as evidence, lossless compression is the better option, because no image information is discarded.

Decimation

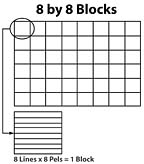

Typical compression codecs divide the image into 8 by 8 blocks of pels and separate the black-and-white brightness component, called the luminance block, from the two color components, which together carry the hue and saturation information (in JPEG and MPEG these are the color-difference signals Cb and Cr). These two color components together are called the chromatic blocks. Each component is processed as its own 8 by 8 grid of data.

The human eye is more sensitive to differences in brightness than to slight changes in color. Compression codecs take advantage of this by discarding a portion of the color information. This process is called decimation, and it is part of the scaling process.
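A minimal sketch of separating luminance from color and cutting a plane into 8 by 8 blocks follows. It assumes the full-range ITU-R BT.601 conversion used by JPEG (JFIF), which produces the color-difference components Cb and Cr; other codecs may use different coefficients or value ranges, and the helper names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Plane = List[List[int]]       # one component as rows of samples


def _clamp(value: float) -> int:
    """Round to the nearest integer and limit to the 8-bit range 0-255."""
    return max(0, min(255, round(value)))


def rgb_to_ycbcr(r: int, g: int, b: int) -> Tuple[int, int, int]:
    """Full-range BT.601 conversion as used by JPEG (JFIF)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b             # luminance
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b  # blue color difference
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b  # red color difference
    return _clamp(y), _clamp(cb), _clamp(cr)


def split_components(image: List[List[Pixel]]) -> Tuple[Plane, Plane, Plane]:
    """Separate an RGB image into a luminance plane and two chroma planes."""
    y_plane: Plane = []
    cb_plane: Plane = []
    cr_plane: Plane = []
    for row in image:
        converted = [rgb_to_ycbcr(*px) for px in row]
        y_plane.append([c[0] for c in converted])
        cb_plane.append([c[1] for c in converted])
        cr_plane.append([c[2] for c in converted])
    return y_plane, cb_plane, cr_plane


def blocks_8x8(plane: Plane) -> List[Plane]:
    """Cut a plane into 8 by 8 blocks, left to right, top to bottom."""
    height, width = len(plane), len(plane[0])
    if height % 8 or width % 8:
        raise ValueError("this sketch assumes dimensions that are multiples of 8")
    return [
        [row[x:x + 8] for row in plane[y:y + 8]]
        for y in range(0, height, 8)
        for x in range(0, width, 8)
    ]


if __name__ == "__main__":
    white = (255, 255, 255)
    image = [[white] * 16 for _ in range(8)]  # 16 pels wide, 8 lines high
    y_plane, cb_plane, cr_plane = split_components(image)
    print(len(blocks_8x8(y_plane)))                       # 2
    print(y_plane[0][0], cb_plane[0][0], cr_plane[0][0])  # 255 128 128
```

A neutral color such as white or black leaves both color-difference components at their midpoint of 128, so all of its information sits in the luminance block.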

Horizontal decimation discards every other column of samples in the chromatic blocks, that is, every other color sample along each horizontal line. Because a set of blocks contains twice as much chromatic data as luminance data (two color blocks for each luminance block), this step alone cuts the total data by one third.
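The sketch below applies horizontal decimation to 8 by 8 chromatic blocks by keeping every other sample along each line, and counts the samples in a set of one luminance and two chromatic blocks before and after. The function names are hypothetical; real codecs may filter (average) neighboring samples rather than simply dropping them.

```python
from typing import List

Block = List[List[int]]


def decimate_horizontal(block: Block) -> Block:
    """Keep columns 0, 2, 4, 6: every other color sample along each line."""
    return [row[0::2] for row in block]


def sample_count(*blocks: Block) -> int:
    """Total number of samples in the given blocks."""
    return sum(len(row) for block in blocks for row in block)


if __name__ == "__main__":
    luma = [[0] * 8 for _ in range(8)]
    cb = [[0] * 8 for _ in range(8)]
    cr = [[0] * 8 for _ in range(8)]
    before = sample_count(luma, cb, cr)  # 64 + 64 + 64
    after = sample_count(luma, decimate_horizontal(cb), decimate_horizontal(cr))  # 64 + 32 + 32
    print(before, after)  # 192 128
```

Going from 192 to 128 samples is the one-third reduction described above, with the luminance block left untouched.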

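Further compression can be achieved by keeping only every other chromatic sample both horizontally and vertically, so that each chromatic block retains one quarter of its original samples. This is called horizontal and vertical decimation, and it halves the total data for a set of blocks.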
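The sketch below keeps every other row and every other column of a chromatic block and checks the resulting sample count for a set of one luminance and two chromatic blocks. The helper names are hypothetical, and simple dropping stands in for whatever filtering a real codec applies.

```python
from typing import List

Block = List[List[int]]


def decimate_both(block: Block) -> Block:
    """Keep every other row and, within those rows, every other column."""
    return [row[0::2] for row in block[0::2]]


def sample_count(*blocks: Block) -> int:
    """Total number of samples in the given blocks."""
    return sum(len(row) for block in blocks for row in block)


if __name__ == "__main__":
    luma = [[0] * 8 for _ in range(8)]
    chroma = [[0] * 8 for _ in range(8)]
    before = sample_count(luma, chroma, chroma)                               # 64 + 64 + 64
    after = sample_count(luma, decimate_both(chroma), decimate_both(chroma))  # 64 + 16 + 16
    print(before, after)  # 192 96
```

Each chromatic block shrinks from 64 to 16 samples, so the set drops from 192 to 96 samples, half the original.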

Scaling

Scaling covers both the reduction of data through decimation and the choice of how many blocks the uncompressed image is divided into for processing. The scaling level, usually selectable in software, determines the quality and resolution of the image after it passes through the codec.

Scaling defines how much information enters the codec for further processing. It’s important to remember that once the picture is scaled, whatever information was discarded through decimation is gone for good. The later compression stages cannot restore it, and lossy stages will likely degrade the picture further.
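To illustrate why decimated information cannot be recovered, the sketch below decimates a block horizontally and vertically and then tries to rebuild it by repeating each surviving sample. The names `decimate` and `upsample` are hypothetical, and sample repetition is only one simple reconstruction choice; decoders often interpolate instead, but no method can restore detail that was discarded.

```python
from typing import List

Block = List[List[int]]


def decimate(block: Block) -> Block:
    """Horizontal and vertical decimation: keep every other row and column."""
    return [row[0::2] for row in block[0::2]]


def upsample(block: Block) -> Block:
    """Best-effort reconstruction: repeat each kept sample into a 2 by 2 patch."""
    out: Block = []
    for row in block:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


if __name__ == "__main__":
    flat = [[50, 50], [50, 50]]
    detailed = [[10, 20], [30, 40]]
    print(upsample(decimate(flat)) == flat)  # True: a flat area loses nothing
    print(upsample(decimate(detailed)))      # [[10, 10], [10, 10]]: detail is gone
```

Flat color areas survive decimation intact, while the values 20, 30, and 40 in the detailed block are replaced by copies of 10 and cannot be brought back.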

The goal of a compression technology is to reduce file size. In the scaling process, the image is divided into a certain number of blocks. If the image shows a person in front of a white wall, many of the blocks will contain nothing but white wall. Instead of transmitting every one of those white blocks, a codec can transmit one white block along with instructions on where the copies belong in the reconstructed image grid on the viewing display.
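The white-wall example can be modeled as sending each distinct block once, together with a list stating where each block goes. This is a deliberately simplified illustration of the idea, not how MPEG or any specific codec encodes blocks; the block contents and function names are hypothetical.

```python
from typing import Dict, List, Tuple

Block = Tuple[Tuple[int, ...], ...]  # an 8 by 8 block, hashable so it can key a dict


def encode_unique(blocks: List[Block]) -> Tuple[List[Block], List[int]]:
    """Send each distinct block once, plus a placement list of indices."""
    unique: List[Block] = []
    index_of: Dict[Block, int] = {}
    placement: List[int] = []
    for block in blocks:
        if block not in index_of:
            index_of[block] = len(unique)
            unique.append(block)
        placement.append(index_of[block])
    return unique, placement


def decode_unique(unique: List[Block], placement: List[int]) -> List[Block]:
    """Rebuild the full block grid from the distinct blocks and their placements."""
    return [unique[i] for i in placement]


if __name__ == "__main__":
    wall = tuple(tuple([255] * 8) for _ in range(8))      # plain white block
    face = tuple(tuple([120 + r] * 8) for r in range(8))  # placeholder "detail" block
    grid = [wall, wall, face, wall, wall, wall]
    unique, placement = encode_unique(grid)
    print(len(unique), placement)  # 2 [0, 0, 1, 0, 0, 0]
    assert decode_unique(unique, placement) == grid
```

Six blocks are reduced to two stored blocks plus six small indices, and the receiver rebuilds the grid exactly.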

Another method of reducing video file size takes advantage of redundancy across a sequence of pictures. When a politician gives a speech on television, the background stays virtually the same while the politician speaks. Compression codecs take advantage of this by transmitting only the changes between successive images. Because the unchanged information is redundant and is reconstructed at the receiving end, removing this temporally redundant information does not adversely affect video quality.
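Transmitting only the changes between successive images can be sketched as comparing two frames block by block and sending only the positions that differ. This is a simplified model assuming both frames use the same block grid; standards such as MPEG use more elaborate techniques (for example, motion-compensated prediction and periodic full frames), and the names here are hypothetical. Strings stand in for pel blocks to keep the example short.

```python
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")  # any block representation that supports equality comparison


def changed_blocks(previous: Sequence[T], current: Sequence[T]) -> Dict[int, T]:
    """Return only the blocks, keyed by grid position, that differ from the previous frame."""
    return {i: cur for i, (prev, cur) in enumerate(zip(previous, current)) if prev != cur}


def apply_changes(previous: Sequence[T], changes: Dict[int, T]) -> List[T]:
    """Receiver side: reuse the previous frame and patch in the changed blocks."""
    return [changes.get(i, block) for i, block in enumerate(previous)]


if __name__ == "__main__":
    frame1 = ["wall", "wall", "wall", "mouth-closed"]
    frame2 = ["wall", "wall", "wall", "mouth-open"]
    delta = changed_blocks(frame1, frame2)
    print(delta)  # {3: 'mouth-open'}
    assert apply_changes(frame1, delta) == frame2
```

Only one of the four blocks is sent for the second frame; the three background blocks are reused from the frame the receiver already has.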

Once the image has been scaled, a series of complex mathematical algorithms further reduces the file size. These algorithms are typically defined by the standard for the type of compression used.
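One widely used example of these mathematical steps is the two-dimensional discrete cosine transform (DCT) applied to 8 by 8 blocks in JPEG and in MPEG-1 and MPEG-2. The sketch below computes an orthonormal 8 by 8 DCT-II directly from its definition; it is slow and for illustration only, and it omits the quantization and entropy-coding stages that follow the transform in those standards. The function name `dct_8x8` is hypothetical.

```python
import math
from typing import List

Block = List[List[float]]
N = 8  # block size


def _alpha(k: int) -> float:
    """Orthonormal scaling factor for frequency index k."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def dct_8x8(block: Block) -> Block:
    """Orthonormal 2-D DCT-II of an 8 by 8 block, computed from the definition."""
    out: Block = [[0.0] * N for _ in range(N)]
    for u in range(N):          # vertical frequency
        for v in range(N):      # horizontal frequency
            total = 0.0
            for x in range(N):  # row index
                for y in range(N):  # column index
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = _alpha(u) * _alpha(v) * total
    return out


if __name__ == "__main__":
    flat = [[10.0] * N for _ in range(N)]  # e.g. a uniform patch of wall
    coeffs = dct_8x8(flat)
    print(round(coeffs[0][0], 6))  # 80.0
```

A flat block, such as a piece of plain wall, ends up with all of its energy in the single top-left (DC) coefficient while the other 63 coefficients are essentially zero, which is what makes such blocks cheap to encode after quantization.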